GarmentLab provides realistic simulation for diverse garments with different physical properties, benchmarking various novel garment manipulation tasks in both simulation and the real world.

Manipulating garments and fabrics has long been a critical endeavor in the development of home-assistant robots. However, due to their complex dynamics and topological structures, garment manipulation poses significant challenges. Recent successes in reinforcement learning and vision-based methods offer promising avenues for learning garment manipulation. Nevertheless, these approaches are severely constrained by current benchmarks, which offer limited task diversity and unrealistic simulation behavior. Therefore, we present **GarmentLab**, a content-rich benchmark and realistic simulation designed for deformable object and garment manipulation. Our benchmark encompasses a diverse range of garment types, robotic systems, and manipulators. The abundant tasks in the benchmark further explore the interactions between garments, deformable objects, rigid bodies, fluids, and the human body. Moreover, by incorporating multiple simulation methods such as the finite element method (FEM) and position-based dynamics (PBD), along with our proposed sim-to-real algorithms and real-world benchmark, we aim to significantly narrow the sim-to-real gap. We evaluate state-of-the-art vision methods, reinforcement learning, and imitation learning approaches on these tasks, highlighting the challenges faced by current algorithms, notably their limited generalization capabilities. Our proposed open-source environments and comprehensive analysis promise to boost future research in garment manipulation by unlocking the full potential of these methods. We commit to open-sourcing our code as soon as possible. You can watch the videos in the supplementary files to learn more about the details of our work.

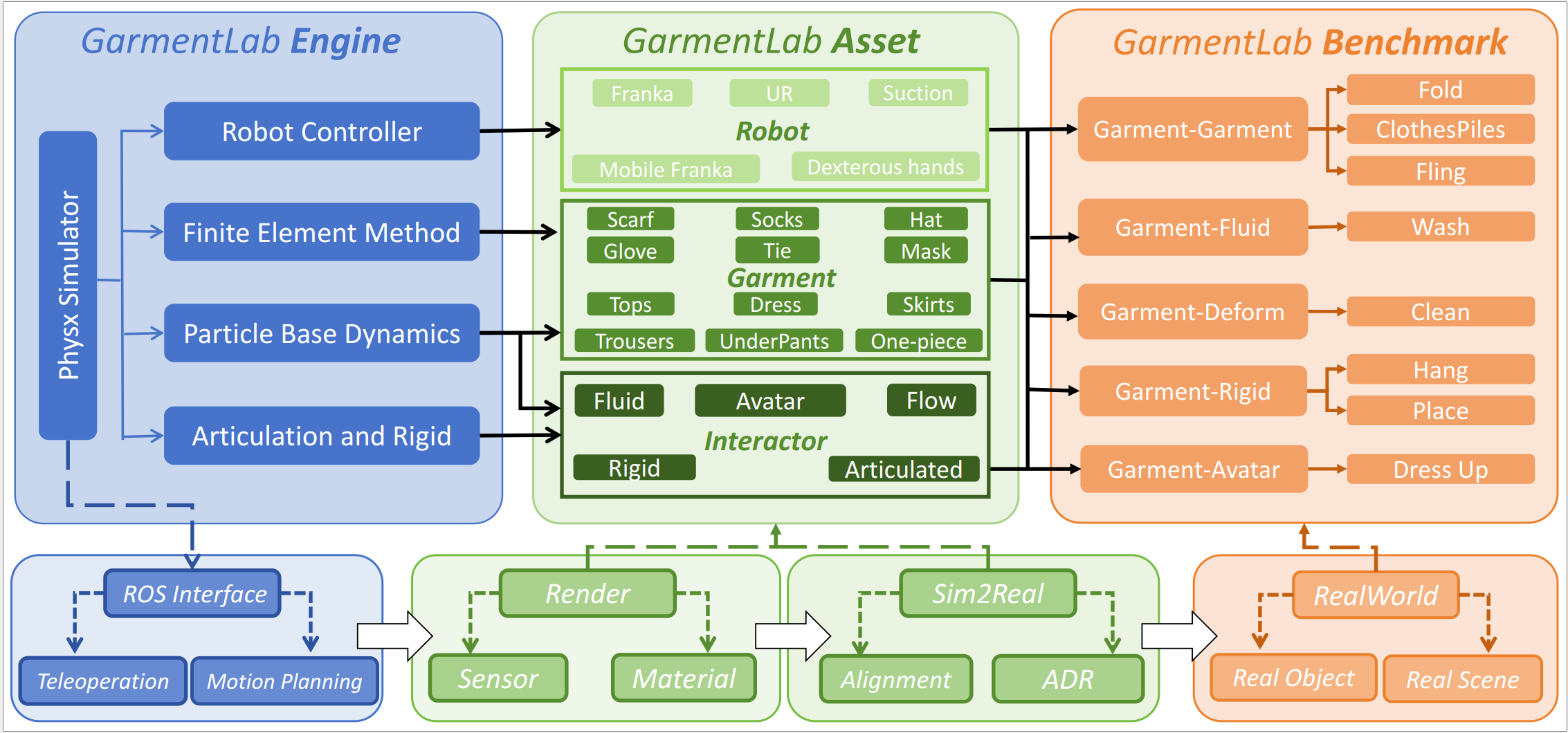

(Left) Built on PhysX5, our environment supports various simulation methods. (Middle) Our environment can deliver realistic simulations of diverse robots, garments, and interactions between multiple physics media. (Right) Subsequently, we can utilize these assets to construct tasks across various categories. (Bottom) The framework supports real-world deployment.

GarmentLab explores the potential of different simulation methods and provides a range of physical parameters that model the distinct properties of different real-world materials.
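To make concrete how a single physical parameter can change the behavior of a simulated material, here is a minimal sketch of position-based dynamics (PBD) on a hanging chain of particles. It is an illustrative toy in plain Python, not GarmentLab's implementation and not the PhysX API. The function name `simulate_chain`, its parameters, and the velocity damping factor are all assumptions introduced for this example.

```python
import math


def simulate_chain(stiffness, n_particles=5, rest_length=0.1, steps=600,
                   dt=1.0 / 60.0, iterations=4, gravity=-9.81, damping=0.98):
    """Toy PBD: hang a chain of unit-mass particles from a pinned particle.

    Returns the distance between the first and last particle after `steps`
    simulation steps. All names and defaults here are hypothetical.
    """
    # Particles start in a vertical line at rest length; particle 0 is pinned.
    pos = [[0.0, -i * rest_length] for i in range(n_particles)]
    vel = [[0.0, 0.0] for _ in range(n_particles)]

    for _ in range(steps):
        # 1) Predict new positions from damped velocities plus gravity.
        pred = [p[:] for p in pos]
        for i in range(1, n_particles):
            vel[i][0] *= damping
            vel[i][1] = vel[i][1] * damping + gravity * dt
            pred[i][0] += vel[i][0] * dt
            pred[i][1] += vel[i][1] * dt

        # 2) Project distance constraints between neighbouring particles.
        for _ in range(iterations):
            for i in range(n_particles - 1):
                a, b = pred[i], pred[i + 1]
                dx, dy = b[0] - a[0], b[1] - a[1]
                dist = math.hypot(dx, dy)
                if dist == 0.0:
                    continue
                w_a = 0.0 if i == 0 else 1.0  # inverse mass; 0 means pinned
                w_b = 1.0
                # Scale the constraint violation by the stiffness in [0, 1].
                corr = stiffness * (dist - rest_length) / (dist * (w_a + w_b))
                a[0] += w_a * corr * dx
                a[1] += w_a * corr * dy
                b[0] -= w_b * corr * dx
                b[1] -= w_b * corr * dy

        # 3) Derive velocities from the corrected positions and commit them.
        for i in range(1, n_particles):
            vel[i][0] = (pred[i][0] - pos[i][0]) / dt
            vel[i][1] = (pred[i][1] - pos[i][1]) / dt
        pos = pred

    return math.hypot(pos[-1][0] - pos[0][0], pos[-1][1] - pos[0][1])


if __name__ == "__main__":
    stiff = simulate_chain(stiffness=1.0)
    soft = simulate_chain(stiffness=0.1)
    print(f"stiff chain length: {stiff:.3f} m, soft chain length: {soft:.3f} m")
```

With the same gravity and rest length, the chain with the lower stiffness settles at a longer length. This is the kind of qualitative difference that separates a stretchy fabric from a stiff one. A production simulator would expose many more parameters, such as bending, friction, and damping, and would use far more robust solvers.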

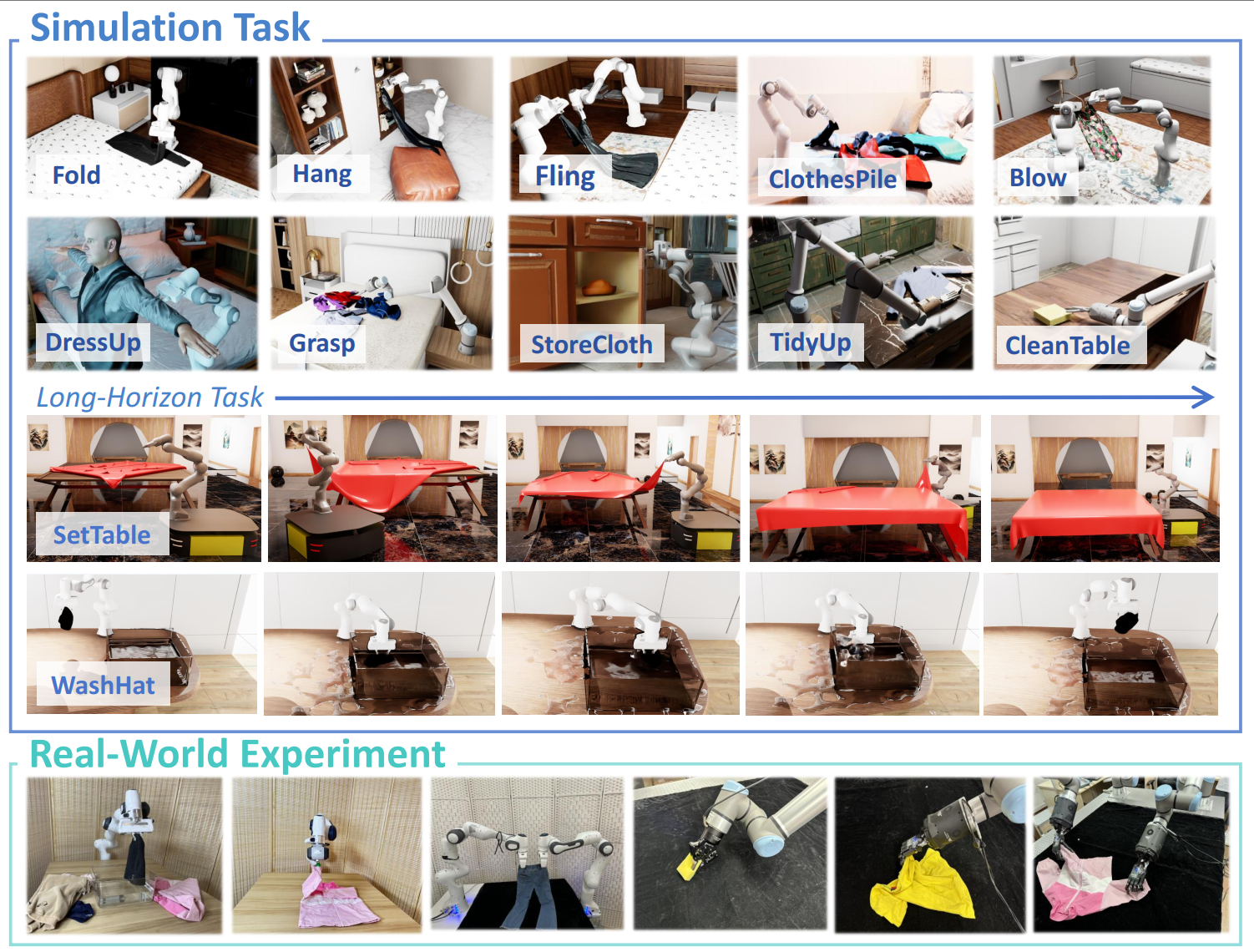

We introduce 20 garment and deformable-object manipulation tasks, including complicated long-horizon tasks. The last row shows the execution of these tasks in the real world.

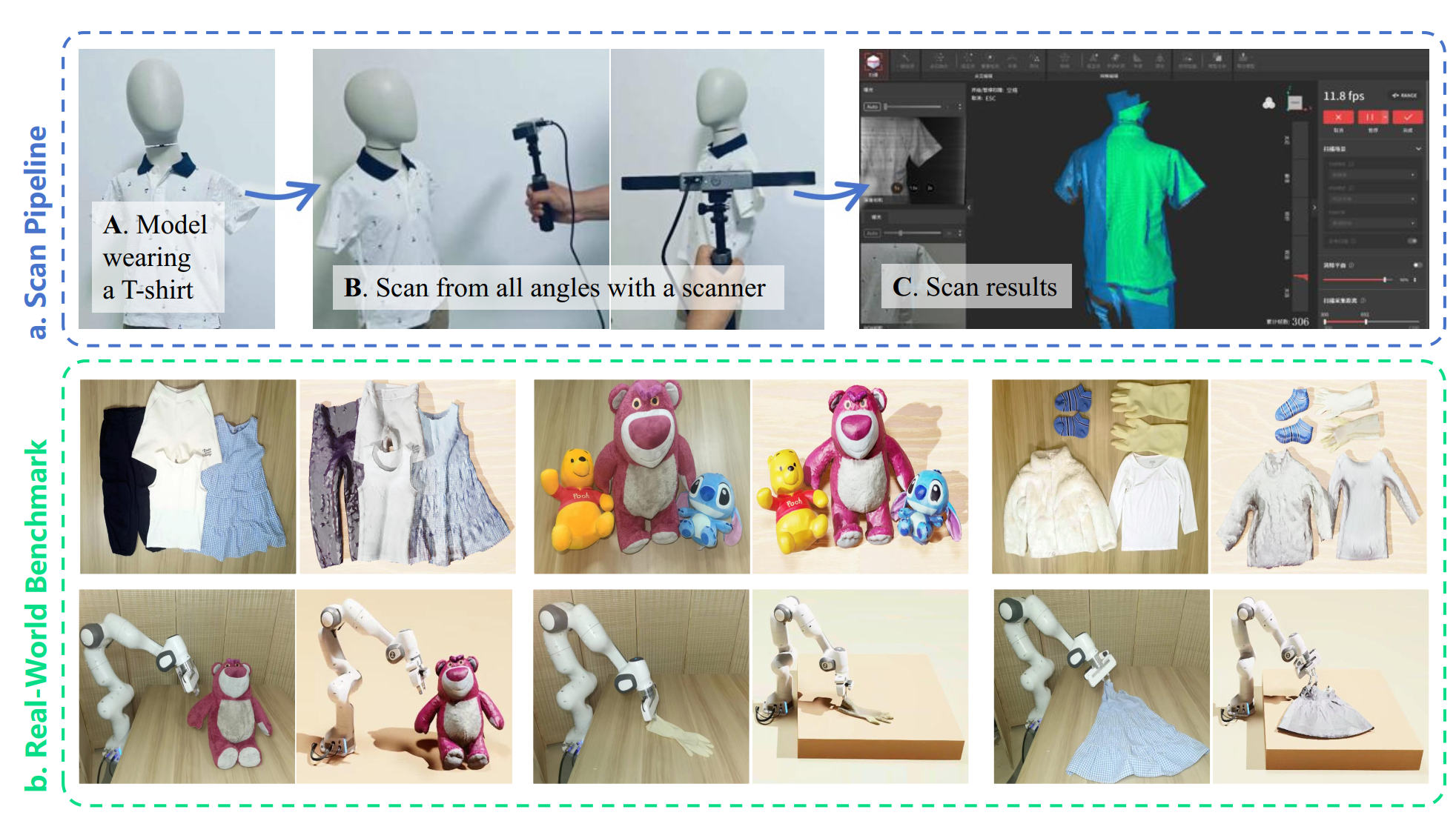

Part (a) demonstrates the whole pipeline of converting real-world objects into simulation assets. Part (b) demonstrates the behavior of different categories of objects in both simulation and the real world (first row), and the results of these objects being manipulated by the robot (second row).

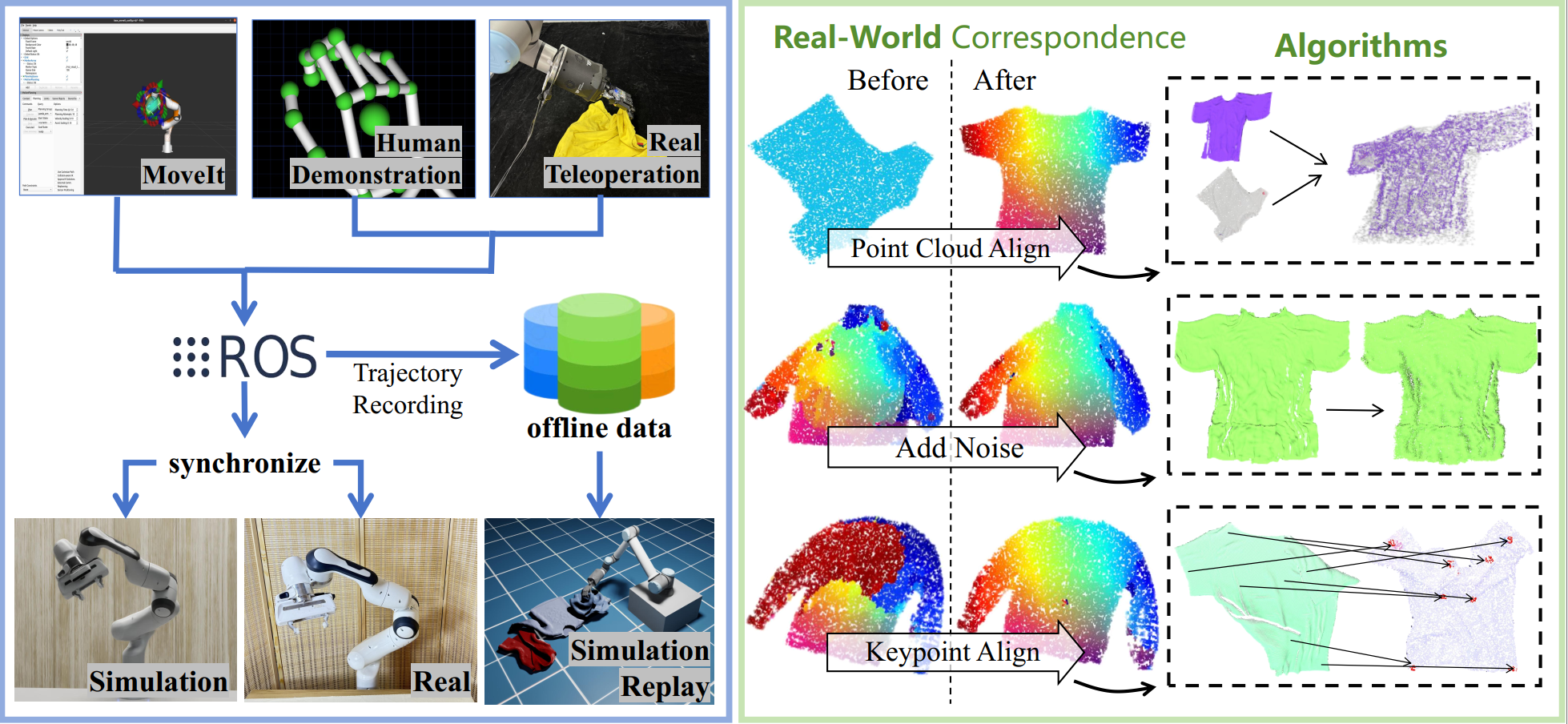

On the left, we highlight our MoveIt and teleoperation pipeline, a lightweight and easy-to-deploy system built using ROS. On the right, we present our three proposed visual sim-to-real algorithms, demonstrating a significant improvement in model performance after deploying these algorithms.

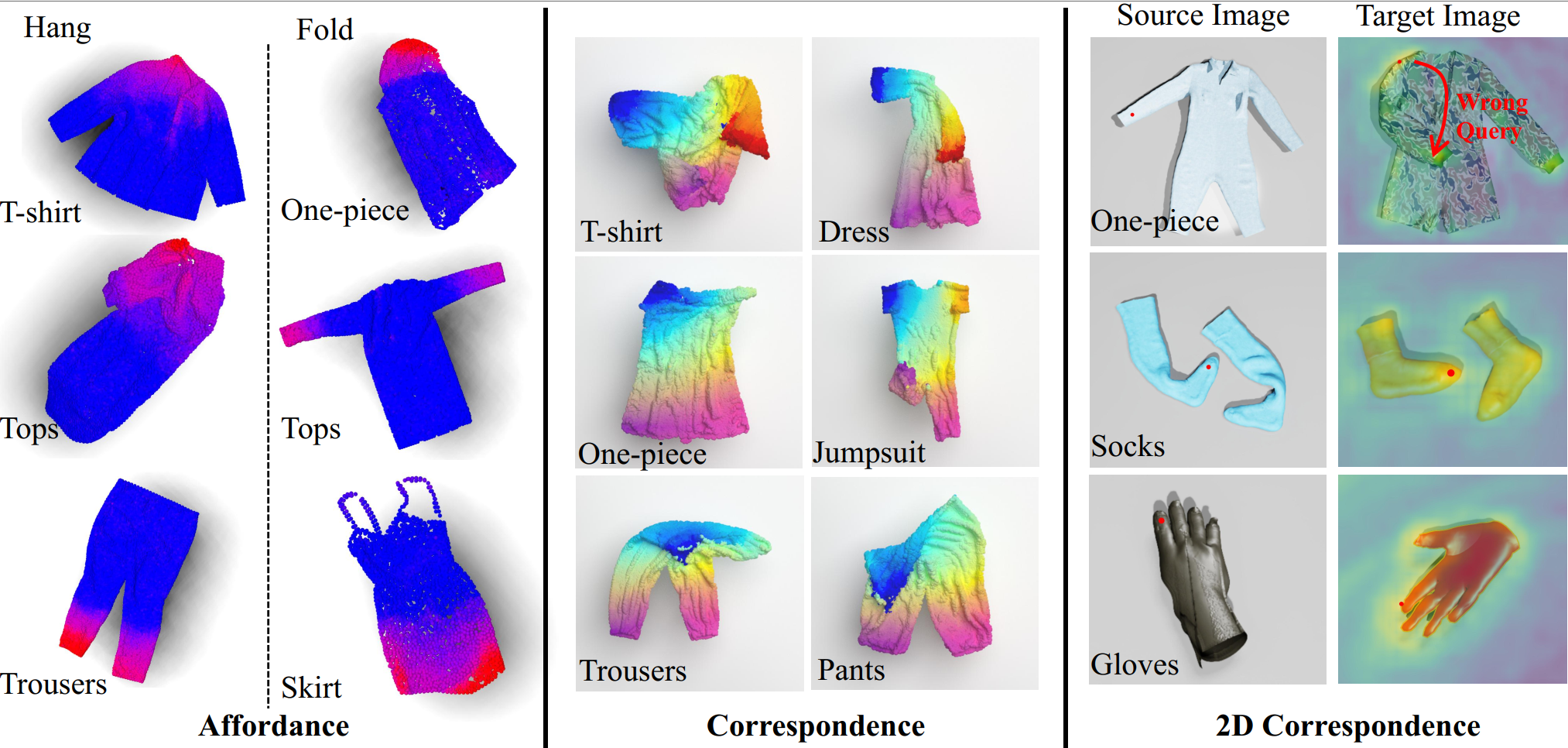

We visualize the qualitative results of the three vision-based algorithms: the left, middle, and right sections of this image correspond to the Affordance, Correspondence, and DIFT algorithms, respectively. Note that DIFT exhibits query errors. For a detailed analysis, please refer to the Experiment section.

@inproceedings{lu2024garmentlab,
  title={Garmentlab: A Unified Simulation and Benchmark for Garment Manipulation},
  booktitle={Advances in Neural Information Processing Systems},
  author={Lu, Haoran and Wu, Ruihai and Li, Yitong and Li, Sijie and Zhu, Ziyu and Ning, Chuanruo and Shen, Yan and Luo, Longzan and Chen, Yuanpei and Dong, Hao},
  year={2024}
}